The conversation about cancer screening is changing within the medical community. Overall, the recent trends have been towards recommending less routine screening, not more. These recommendations are based on an evolving—if counterintuitive—understanding that more screening does not necessarily translate into fewer cancer deaths and that some screening may actually do more harm than good.

For some common cancer types, such as cervical, colorectal, lung, and breast cancer, clinical trials have shown that screening does save lives. However, the size of the benefit is widely misunderstood. For mammography in women aged 50 to 59, for example, more than 1,300 women need to be screened to save one life. Such calculations also do not take into account the potential harms of screening, such as unnecessary and invasive follow-up tests or anxiety caused by false-positive results.

Much of the confusion surrounding the benefits of screening comes from interpreting the statistics that are often used to describe the results of screening studies. An improvement in survival—how long a person lives after a cancer diagnosis—among people who have undergone a cancer screening test is often taken to imply that the test saves lives.

But survival cannot be used accurately for this purpose because of several sources of bias.

Lead-Time Bias in Cancer Screening

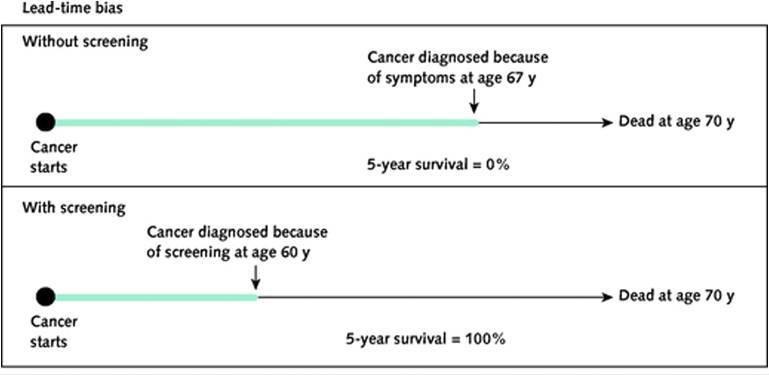

Lead-time bias occurs when screening finds a cancer earlier than it would have been diagnosed because of symptoms, but the earlier diagnosis does nothing to change the course of the disease. (See the graphic for further explanation.)

The apparent extreme increase in 5-year survival seen in the graphic "is illusory," explained Lisa Schwartz, M.D., M.S., professor of medicine and co-director of the Center for Medicine and Media at The Dartmouth Institute. "In this example, the man does not live even a second longer. This distortion represents lead-time bias."

Lead-time bias is inherent in any comparison of survival. It makes survival time after screen detection—and, by extension, earlier cancer diagnosis—an inherently inaccurate measure of whether screening saves lives.
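The effect can be reproduced with a few lines of arithmetic. The sketch below is only an illustration: the ages (diagnosis at 67 from symptoms, at 60 from screening, death at 70) and the function name `five_year_survival` are hypothetical values chosen for this example, not data from any study.

```python
def five_year_survival(diagnosis_ages, death_ages):
    """Fraction of patients still alive 5 or more years after diagnosis."""
    survivors = sum(
        1 for dx, death in zip(diagnosis_ages, death_ages) if death - dx >= 5
    )
    return survivors / len(diagnosis_ages)


# Hypothetical cohort: every patient dies at age 70, screened or not.
death_ages = [70] * 100

# Without screening, symptoms lead to a diagnosis at age 67.
symptom_dx = [67] * 100
# With screening, the same cancers are found at age 60 (7 years of lead time).
screen_dx = [60] * 100

print(five_year_survival(symptom_dx, death_ages))  # 0.0
print(five_year_survival(screen_dx, death_ages))   # 1.0
```

Five-year survival jumps from 0% to 100% even though the list of death ages is identical in both scenarios, which is exactly the distortion described above.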

Unfortunately, the perception of longer life after detection can be very powerful for doctors, noted Donald Berry, Ph.D., professor of biostatistics at the University of Texas MD Anderson Cancer Center.

"I had a brilliant oncologist say to me, 'Don, you have to understand: 20 years ago, before mammography, I'd see a patient with breast cancer, and 5 years later she was dead. Now, I see breast cancer patients, and 15 years later they're still coming back, they haven't recurred; it's obvious that screening has done wonders,'" he recounted. "And I had to say no—that lead-time bias could completely explain the difference between the two [groups of patients]."

Length Bias and Overdiagnosis in Cancer Screening

Another confounding phenomenon in screening studies is length-biased sampling (or "length bias"). Length bias refers to the fact that screening is more likely to pick up slower-growing, less aggressive cancers, which can exist in the body longer than fast-growing cancers before symptoms develop.

With any screening test "you're going to pick up the slower-growing cancers disproportionately, because the preclinical period when they can be detected by screening but before they cause symptoms—the so-called sojourn time—is longer," explained Dr. Berry.
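A small simulation can make this sampling effect concrete. The sketch below assumes two hypothetical cancer types that are equally common, with made-up sojourn times of 4 years (slow) and 1 year (fast), and a single screening round; none of these numbers come from the studies discussed here.

```python
import random


def slow_share_among_screen_detected(n_per_type=100_000, seed=0):
    """Simulate one screening round and return the share of
    screen-detected cancers that are slow-growing."""
    rng = random.Random(seed)
    window_years = 20.0  # cancer onsets are spread uniformly over this period
    screen_time = 10.0   # the single screen happens at this point in time
    # Assumed preclinical (sojourn) durations in years; purely illustrative.
    sojourn = {"slow": 4.0, "fast": 1.0}

    detected = {"slow": 0, "fast": 0}
    for kind, duration in sojourn.items():
        for _ in range(n_per_type):
            onset = rng.uniform(0.0, window_years)
            # The screen can find a cancer only while it is preclinical.
            if onset <= screen_time < onset + duration:
                detected[kind] += 1
    return detected["slow"] / (detected["slow"] + detected["fast"])


share = slow_share_among_screen_detected()
print(f"Slow-growing share of screen-detected cancers: {share:.2f}")
```

Although the two types are equally common in this toy population, the slow-growing type should make up roughly 80% of screen-detected cases (its sojourn time is four times longer), so the screen-detected group looks healthier than cancer patients overall.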

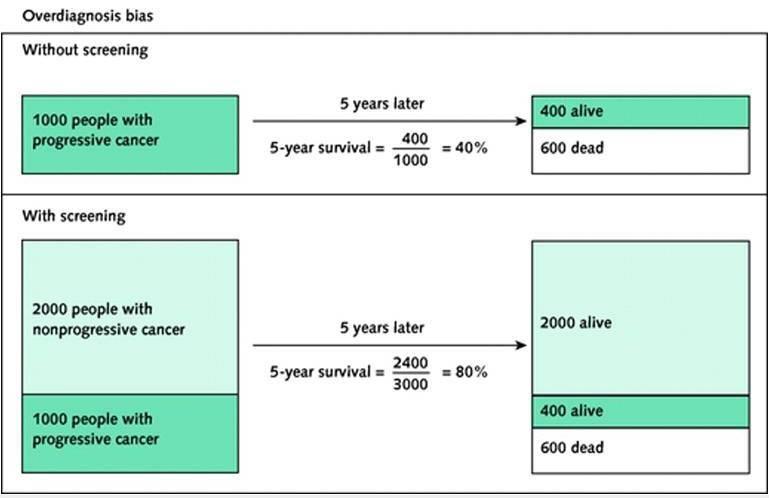

The extreme example of length bias is overdiagnosis, in which a slow-growing cancer found by screening never would have caused harm or required treatment during a patient's lifetime. Because of overdiagnosis, the number of cancers found at an earlier stage is also an inaccurate measure of whether a screening test can save lives. (See the graphic for further explanation.)

The effects of overdiagnosis are usually not as extreme in real life as in the worst-case scenario shown in the graphic: many cancers detected by screening tests do need to be treated. But some do not. For example, studies have estimated that 19% of screen-detected breast cancers and 20% to 50% of screen-detected prostate cancers are overdiagnosed.
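To see why overdiagnosis inflates survival statistics without saving anyone, consider a worst-case sketch with made-up numbers: 1,000 people with progressive cancer, 400 of whom are alive 5 years after diagnosis, and a screening test that additionally finds 2,000 cancers that would never have caused harm. All counts and names below are hypothetical.

```python
def five_year_survival_pct(alive_at_5_years, diagnosed):
    """Percentage of diagnosed patients alive 5 years after diagnosis."""
    return 100 * alive_at_5_years / diagnosed


# Hypothetical counts, chosen only for illustration.
progressive_cases = 1000    # cancers that would cause symptoms and harm
progressive_alive = 400     # of those, alive 5 years after diagnosis
overdiagnosed_cases = 2000  # harmless cancers found only by screening

# Without screening, only the progressive cancers are ever diagnosed.
survival_without = five_year_survival_pct(progressive_alive, progressive_cases)
deaths_without = progressive_cases - progressive_alive

# With screening, the overdiagnosed cases join both numerator and denominator.
diagnosed_with = progressive_cases + overdiagnosed_cases
alive_with = progressive_alive + overdiagnosed_cases
survival_with = five_year_survival_pct(alive_with, diagnosed_with)
deaths_with = diagnosed_with - alive_with

print(survival_without, deaths_without)  # 40.0 600
print(survival_with, deaths_with)        # 80.0 600
```

Five-year survival doubles from 40% to 80%, and the number of early-stage diagnoses rises sharply, yet the same 600 people die in both scenarios.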

How to Measure Lives Saved

Because of these biases, the only reliable way to know if a cancer screening test reduces deaths from cancer is through a randomized trial that shows a reduction in cancer deaths in people assigned to screening compared with people assigned to a control (usual care) group. In the NCI-sponsored randomized National Lung Screening Trial (NLST), for example, screening with low-dose spiral CT scans reduced lung cancer deaths by 15% to 20% relative to chest x-rays in heavy smokers. (Previous studies had shown that screening with chest x-rays does not reduce lung cancer mortality.)

However, improvements in mortality caused by screening often look small—and they are small—because the chance of a person dying from a given cancer is, fortunately, also small. "If the chance of dying from a cancer is small to begin with, there isn't that much risk to reduce. So the effect of even a good screening test has to be small in absolute terms," said Dr. Schwartz.

For example, in the case of NLST, a 20% decrease in the relative risk of dying of lung cancer translated to an approximately 0.4-percentage-point reduction in lung cancer mortality (from 1.7% in the chest x-ray group to 1.3% in the CT scan group) after about 7 years of follow-up, explained Barry Kramer, M.D., M.P.H., director of NCI's Division of Cancer Prevention.
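The relationship between relative and absolute risk reduction can be checked directly from the rounded mortality figures quoted above (1.7% and 1.3%). The sketch below is a simple calculation, not the trial's own analysis; because the inputs are rounded, the relative reduction comes out near 24% rather than exactly 20%, and the number needed to screen is only a rough estimate. The function name `risk_reduction` is illustrative.

```python
def risk_reduction(control_risk, screened_risk):
    """Return (absolute reduction, relative reduction, number needed to screen)."""
    absolute = control_risk - screened_risk
    relative = absolute / control_risk
    number_needed_to_screen = 1 / absolute
    return absolute, relative, number_needed_to_screen


# Rounded lung cancer mortality figures quoted in the text.
absolute, relative, nns = risk_reduction(control_risk=0.017, screened_risk=0.013)

print(f"Absolute reduction: {absolute * 100:.1f} percentage points")  # 0.4
print(f"Relative reduction: {relative:.0%}")                          # 24%
print(f"Number needed to screen: {nns:.0f}")                          # 250
```

The same benefit can be described as "about a fifth fewer lung cancer deaths" or as "0.4 percentage points"; both are accurate, but only the absolute figure conveys how small the underlying risk was to begin with.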

A study published in 2012 in the Annals of Internal Medicine by Dr. Schwartz and her colleagues showed how these relatively small—but real—reductions in mortality from screening can confuse even experienced doctors when pitted against large—but potentially misleading—improvements in survival.

Tricky Even for Experienced Doctors

To test community physicians' understanding of screening statistics, Dr. Schwartz, Dr. Steven Woloshin (co-director of the Center for Medicine and Media at The Dartmouth Institute and professor of medicine), and their collaborators from the Max Planck Institute for Human Development in Germany developed an online questionnaire based on two hypothetical screening tests. They then administered the questionnaire to 412 doctors specializing in family medicine, internal medicine, or general medicine who had been recruited from the Harris Interactive Physician Panel.

The effects of the two hypothetical tests were described to the participants in two different ways: in terms of 5-year survival and in terms of mortality reduction. The participants also received additional information about the tests, such as the number of cancers detected and the proportion of cancer cases detected at an early stage.

The results of the survey showed widespread misunderstanding. Almost as many doctors (76% of those surveyed) believed—incorrectly—that an improvement in 5-year survival shows that a test saves lives as believed—correctly—that mortality data provides that evidence (81% of those surveyed).

About half of the doctors erroneously thought that simply finding more cases of cancer in a group of people who underwent screening compared with an unscreened group showed that the test saved lives. (In fact, a screening test can save lives only if it advances the time of diagnosis and earlier treatment is more effective than later treatment.) And 68% of the doctors surveyed said they were even more likely to recommend the test if evidence showed that it detected more cancers at an early stage.

The doctors surveyed were also three times more likely to say they would recommend the test supported by irrelevant survival data than the test supported by relevant mortality data.

In short, "the majority of primary care physicians did not know which screening statistics provide reliable evidence on whether screening works," Dr. Schwartz and her colleagues wrote. "They were more likely to recommend a screening test supported by irrelevant evidence…than one supported by the relevant evidence: reduction in cancer mortality with screening."

This lack of understanding can prevent patients from getting the information they need to make informed choices about cancer screening. In a small follow-up study led by one of the researchers, fewer than 10% of patients surveyed said their doctor told them about the potential for overdiagnosis and overtreatment when talking about cancer screening. In contrast, 80% said they would like to receive information from their doctors about the potential harms of screening as well as the benefits.

Teaching the Testers

"In some ways these results weren't surprising, because I don't think [these statistics] are part of the standard medical school curriculum," said Dr. Schwartz.

"When we were in medical school and in residency, this wasn't part of the training," Dr. Woloshin agreed.

"We should be teaching residents and medical students how to correctly interpret these statistics and how to see through exaggeration," added Dr. Schwartz.

Some schools have begun to do this. The University of North Carolina (UNC) School of Medicine provides a course called The Science of Testing to its students, explained Russell Harris, M.D., M.P.H., professor emeritus of medicine at UNC. The course includes modules on 5-year survival and mortality outcomes.

"The majority of students are very appreciative of the course, and a lot of people think: 'Gee, we should be continuing this throughout medical school,'" said Dr. Harris.

The UNC team also received a research grant from the Agency for Healthcare Research and Quality to fund a Research Center for Excellence in Clinical Preventive Services from 2011 to 2015. "Part of our mandate was to talk not only to medical students but also to community physicians, to help them begin to understand the pros and cons of screening," said Dr. Harris.

"Gradually, I think we're getting the word out, but it takes a long time. Some of the things we talk about are counterintuitive, like the idea that it's not always better to find things earlier rather than later," he added. "The whole concept of overdiagnosis is not easy, so it's a long-term process to help these students and practicing physicians understand."

Drs. Schwartz and Woloshin also think that better training for reporters, advocates, and anyone who disseminates the results of screening studies is essential. "A lot of people see those [news] stories and messages, so people writing them need to understand [what cancer screening statistics really mean]," said Dr. Woloshin.

Patients also need to know the right questions to ask their doctors. "Always ask for the right numbers," he recommended. "You see these ads with numbers like '5-year survival changes from 10% to 90% if you're screened.' But what you always want to ask is: 'What's my chance of dying [from the disease] if I'm screened or if I'm not screened?'"