Stream the episode

Additional Information

Interviewed in this episode:

Dr. Lou Staudt

Dr. Robert Grossman

Dr. Jean Claude Zenklusen

Episode Transcript

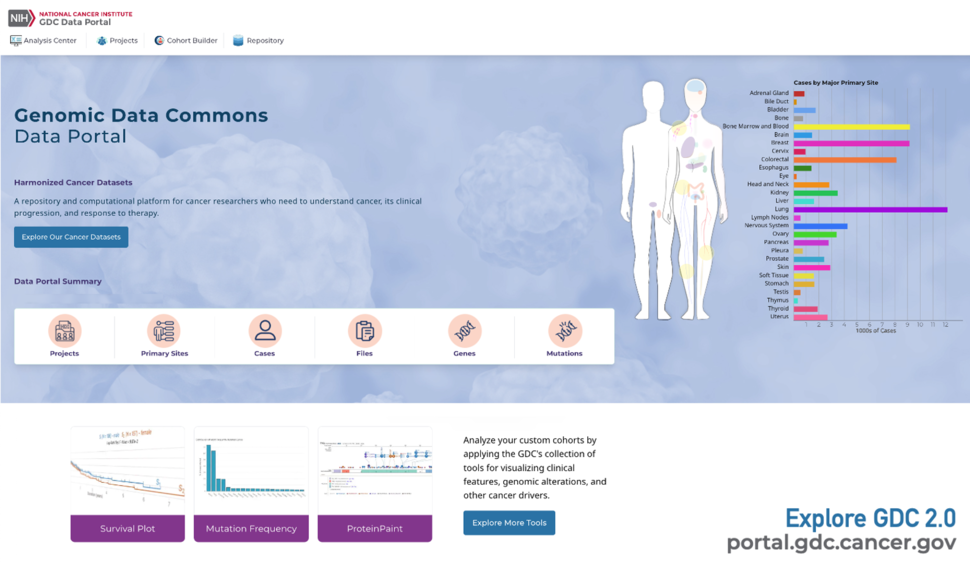

[Melissa Porter] “Welcome to the NCI Center for Cancer Genomics Personal Genomics podcast. In this episode we are going to talk about the National Cancer Institute’s Genomic Data Commons, also known as the GDC. It is a massive project to provide the cancer research community with a repository and computational platform for researchers who need to better understand cancer, its clinical progression, and its response to therapy, not at the level of a tumor, but inside DNA molecules, which collectively are considered the instructions for human life. It is an extraordinarily complex endeavor, and we are fortunate to have with us three leaders of the Genomic Data Commons who can explain it all for us.

Doctor Lou Staudt established his laboratory at NCI 36 years ago. That laboratory now focuses on the molecular basis for human lymphoid malignancies and the development of targeted therapies for these cancers. He pioneered the use of gene expression profiling to discover molecularly and clinically distinct cancer subtypes and to predict response to therapy. Doctor Staudt is Co-Chief of the NCI Lymphoid Malignancies Branch and the Director of the NCI Center for Cancer Genomics, which oversees the Genomic Data Commons.

Doctor Jean Claude Zenklusen is the Deputy Director of the Center for Cancer Genomics. In 1996, Dr. Zenklusen took a postdoctoral position at the National Human Genome Research Institute here at the NIH, where he participated in the landmark Human Genome Project. He later became a senior staff scientist in the NCI Neuro-Oncology Branch and then served as a scientific program director in the Office of Cancer Genomics. In August 2013, Dr. Zenklusen was named the Director of The Cancer Genome Atlas, TCGA, the largest-scale cancer genomics project to date, and he is currently the Deputy Director of the Center for Cancer Genomics.

Joining us from the University of Chicago is Doctor Robert Grossman, who is a person with many titles. Dr. Grossman is the Frederick H. Rawson Distinguished Service Professor of Medicine and Computer Science and the Jim and Karen Frank Director of the Center for Translational Data Science. He's also the Chief of the Section of Biomedical Data Science in the Department of Medicine. He chairs the not-for-profit Open Commons Consortium, which develops and operates clouds to support research in science, medicine, healthcare, and the environment, and he's a partner of Analytical Strategy Partners, LLC. If all of those credentials weren't enough, Dr. Grossman is here with us because he is the principal investigator on the contract that runs the Genomic Data Commons for the NCI.

I'm Melissa Porter, Director of Operations for NCI Center for Cancer Genomics, and I'm joined by my colleague Rich Folkers from NCI's Office of Communication and Public Liaison, who's going to help guide our conversation today.”

[Rich Folkers] “So let's start with you, Doctor Staudt if we could. You began your career with the NCI, at a time when the decoding of the human genome was a goal, but certainly not a certainty. You clearly have been a witness to a tremendous amount of change. Could you give us a window into how you see the sweep of the history of genomic discovery and how it leads to where you are today?”

[Lou Staudt] “It's been a wild ride in my life through the advent of genomics. I began doing genomics, which to me had the scope of seeing disease processes through all the genes that we inherit, not just a single gene. When I got started, we didn't know where all the genes were, but we were able to capture little snippets of genes in the form of DNA clones, put them on a DNA microarray, and profile various kinds of disease processes, including cancer, for the expression of these genes, the activity of these genes. And slowly it began to emerge, through the great work of the Human Genome Project, what these genes were. Almost every week I would take my collection of sequences of these random clones and use a bioinformatics tool to see whether they had shown up in the databases of genes and what that might tell me about their function. So it was kind of like a form emerging through a miasma, a kind of cloud of uncertainty. Then, obviously, at some point the human genome was completed, and to this day we continue to understand more and more about where the active parts of the genome are. But the technologies rapidly advanced to our current state, where in the course of an overnight run on a next-generation sequencer we are able to read the entire genome of a single person or a single tumor biopsy. So I've seen this go from genes one by one to the whole genome overnight, so it's been pretty exciting.”

[Rich] “Was there at the beginning, or am I overstating this, a bit of irrational exuberance, in that many believed that all we had to do was sequence the genes and all the dominoes would fall and diseases would be cured one after another?”

[Lou] “I think there was. There was always the hope that one could simply know what versions of all the genes each individual had, and that could tell you a lot about their disease risk. And indeed it does tell you something about their disease risk, but I was particularly interested in cancer, and cancer is special in that you start with the inherited set of genes that you have, but then a cancer develops, and the cancer mutates and amplifies or deletes genes throughout the genome, so the cancer genome is a very distorted view of what you inherit from your mother and your father. So there's so much to learn from cancer genomes about the function. It's not that we haven't learned a lot from just the germline sequence of people and its relationship to disease. We have learned a tremendous amount, in fact. But in some disease processes, like cancer, that's really insufficient.”

[Rich] “So one for you, Doctor Zenklusen. One of the big stories about genomics has clearly been The Cancer Genome Atlas. When it began, it was a somewhat controversial initiative, which some doubted would ever succeed, or at least thought might not provide useful information. Suffice to say, those fears were unfounded. But could you summarize the sweep and the value of TCGA?”

[Jean Claude Zenklusen] “Well, as Lou was saying, I used to be part of the genome project. I was a postdoc doing the genome. We were doing those God-awful plate sequences, which were 400 bases at the time. They drove you totally crazy, and we wondered how long it was going to take, and it really took a fair amount of effort. But then when that became available, it became clear that it was going to be a tool to exploit, and TCGA, I think, is the best exponent of exploiting the genome because, as Lou said, the cancer genome is a very distorted form of your genome because of all the mutations. But the genes were there, and so we knew the parts of the clock, if you want. But we didn't know how they fit together and how they get changed to distort the clock, and for that you needed TCGA. And when TCGA came along, I think the doubts were based on the fact that the technology was really, really new. It was very complicated and it was very difficult to do the analysis. And so the question was less whether the initiative was going to give us data that would help, and more whether this was the right moment to do it. Are we ready to do this? And I must say, at the level of the pilots, we were borderline ready. We started with certain sequencing technologies that then happened not to work very well. And so we kind of used samples and resources on something that was not productive, but we learned very fast from our mistakes. I always get told when we share our documents and all of our SOPs, ‘Oh, this is amazing. You guys really have it.’ And I always say yes, but we have it because we made every single mistake and we learned from that. And so by the time we had done four or five tumor types, we really knew how to do it, because all the things that could have gone wrong went wrong, and we knew not to do that.
And what happened was that now, for every single major tumor type and even some of the rarer cancers, we not only knew what the parts were, but we knew which parts had gone wrong. And so that way it helps you analyze your particular cancer. Without TCGA, people would still be walking in the dark with their hands out in front. Now you don't need to do that. We know which genes get modified, amplified, expressed differently, methylated. We don't really know what that means for the biology, because TCGA did not deal with the biology that much, but that gives a leg up to anybody who wants to study a particular tumor type, knowing that, OK, I'm going to look at these sets of genes because these are the ones that have been identified as being relevant, and how do they relate to others. And that saves years of work. And so I always say that the best thing TCGA did was not only produce the resource, but make it publicly available and easy to use for everybody who wants to use it, in a way that allows pretty much everybody to compare what they know with the set of samples that are there. And that's where the GDC comes in. Basically, we need a way to disperse that, and I think the GDC, not because I’m involved, is really the perfect way to do it.”

[Rich] “So Doctor Grossman, for the benefit of those of us who see Big Data as a three page Excel spreadsheet, could you kind of walk us through what is the GDC, what is it set up to do and why is it so complicated?”

[Robert Grossman] “So the GDC is a system of systems, a number of systems, and its role is to manage the data, the genomic and associated data, which includes the imaging data, the clinical data, and the biospecimen data. The role of the GDC is to manage the data, to curate the data, to uniformly analyze the data, and then to share it in an easy way with the cancer research community, and I'll go through those one by one. The GDC has a large amount of data, measured in petabytes, and at least when the GDC system started, there were very, very few systems that could manage petabytes of data. These days, with cloud computing, it's easier, but it was a big lift at the beginning. So, one, just managing the data.

Two, the data comes from different sites, and a good portion of what TCGA did so well, and the GDC continues, is to curate the data so that all the data elements are commonly defined and the data is aligned with those data elements when submitted, so that the data, regardless of which site and which cancer project it comes from, is easy to analyze. Not that many projects can do a good job of curation at scale. But the GDC took it one step further. Genomic data is complicated, as we just heard. There are a lot of so-called bioinformatics pipelines, complicated workflows that have to analyze the data, and if an investigator at one university uses one set of pipelines with one set of parameters and gets mutations that they use to try to understand disease progression, and another investigator at another university uses another set of pipelines to understand the mutations, the mutations may be different. So what the GDC did was to uniformly, as it's called, harmonize the data: run a common set of pipelines over all the data. That itself is a whole system. We call it the GDC processing system, and it's a very large-scale system that each month runs millions of core hours to uniformly analyze the data and produce data products that are easy for researchers to use, so that they, unless they're interested, don't have to go back to the large genomic files. So that's the third thing it does. We manage, we curate, we harmonize.

The fourth thing it does is to make the data available in an easy, intuitive interface for researchers to get the data that they want, and to do this at scale. In most months these days, we have over 100,000 users each month who use the GDC. And so the GDC makes data products available at scale from a very large collection of cases, in a way that lowers the effort for each researcher to make discoveries, to accelerate research in cancer.”

[Rich] “So, Doctor Staudt, how does the GDC as it stands today compare to what in your heart you were hoping it would be when it launched?”

[Lou] “Right, so when we were conceiving the GDC, it was a very simple problem, which is that only the people in the TCGA project, or rather only a subset of the people within the TCGA project, even knew where all the data was that was being generated. It was in a complicated file system, where there were names of files with code numbers on them, and you had to know the code to find the data that you wanted. It was clear when Harold Varmus asked me to take over the Center for Cancer Genomics that our first step was to find all the data so that, first of all, everybody in the project itself could democratically access the data. And then, since we were by design releasing these data into the world as the TCGA project proceeded, we needed to make it in a form that wasn't Byzantine, but was rather findable and usable and, as Bob just got through saying, in a harmonized format, so that it was uniform. So I think we easily achieved that. But it has definitely exceeded my wildest dreams. If you had asked me, would there be 100,000 people every month trying to get access to this data, downloading two to five petabytes of data? A petabyte is 1,000 of those terabyte hard drives that sit on your desk. Even one petabyte is a lot of data, and they're downloading several petabytes. It just shows you how valuable this data is. As JC said, this is the reference set; from some perspectives, some of this doesn't have to be done again. This is such a large collection of tumors that it is really giving us a fairly complete view of what the genetic abnormalities are in cancer. So on that front it has exceeded my expectations, but on another front, we're trying to make it meet some new expectations.
And so the world of cancer genomics has been taken by storm with the ability not just to look at a single tumor, but to individually look at every cell within that tumor and ask: What genes are active? What genes are inactive? What mutations are there? What changes may there be in the chromosomes? It has been an unbelievable advent of single-cell genomics in the last few years. And these data are like the TCGA data in the early days. They are scattered here, there, and everywhere. They have been analyzed on every conceivable analytical platform. And it takes someone of unusual computational skill to bring together different single-cell data sets. So what I hope for the future of the GDC is that we can also be a go-to repository for single-cell data in cancer, so that researchers of more than modest attainments, but without a PhD in computer science, can actually use these data in a way that will immediately help their research. So the thing I want to emphasize is that the GDC is a work in progress. It has been, and I think it will continue to be. For example, one of the things that we're excited about this year is that we're going to issue a challenge to the bioinformatics and computational biology community to propose new tools that would help us analyze the data within the GDC, and the prize for the winner of that challenge will be the use of that tool within the GDC, so that they will have contributed to this awesome computational engine that is the GDC. So I think by crowdsourcing we're going to get even more creativity coming into the GDC, and we will continue to grow.”

[Rich] So, Doctor Grossman, I don't think it would surprise anyone that the federal government and a large university don't operate in the same manner. In the case of the GDC, though, how would you say that the collaboration between those two is benefiting science?

[Bob] I think it's a wonderful collaboration. The National Cancer Institute not only produces great science itself in cancer, but funds great projects throughout the US and elsewhere. When those projects produce great genomic data, that data loads to the GDC. So the addition, in a regular way, of the best data from the best projects funded by the NCI makes the GDC not a static resource, but, as we just heard, a resource that grows constantly. And it's not just the new projects; new projects bring new data types. We have a lot of new data types, most recently single cell. There are new data types and new analysis algorithms, and so the NCI is a wonderful focal point for bringing together a critical mass of data.

Conversely, the University of Chicago has the ability to build new platforms for managing, analyzing, and sharing data that have never been built before. And so we see our primary role as building these platforms and then supporting the best available analysis tools, so that the data products produced are high quality and uniform. This itself is a fundamental computer science problem, because at this scale it's not just analyzing the data, it's doing it with the very high level of quality that the GDC demands. So the University can innovate on the platform, can innovate on how we analyze data at this scale, and can innovate on how we make this data available to the community. We haven't discussed this so far, but one of the Blue Ribbon Panel reports had as a recommendation creating a data ecosystem, and I think one of the most important decisions was that the GDC was designed, and we were one of the first systems out there to take this approach, with what are now called FAIR APIs. These are ways for other systems that analyze data to find, access, interoperate with, and reuse data, with security and compliance. This is now a common way of building a platform. But when the GDC was designed, in 2010 to 2012 with the early prototypes and in 2014 to 2016 with what became the GDC, it was one of the original platforms to support and advocate these FAIR APIs. What that means is the GDC is not just used to analyze and share the data products. It's used as a hub, a center of a whole data ecosystem, where people from their own platforms, from data commons, data repositories, and platforms in related spaces, and even on their desktops, can access the data not through the user interface, which many users do, but through these APIs.
And so that created a very important data ecosystem, one of the first out there. I think it's often easy to forget when you look at the fancy interface, but this is all done over these same APIs that drive this rich ecosystem of applications, and it encourages ingenuity in the cancer research community because they can build their own platforms and access data and analysis results from the GDC. So I think the GDC consists of a long line of firsts in terms of how to build platforms to accelerate research for the scientific community, including the core design, which is now replicated in most of the platforms out there, as well as this open ecosystem design, which unfortunately is not replicated, I think, as often as it should be. So I think it's a wonderful partnership. Both sides benefit, and I don't think either side could do it by themselves.
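As a rough illustration of the API access Bob describes, the sketch below builds a query URL for the GDC's public REST API without sending it. The `files` endpoint and the `filters`, `fields`, `format`, and `size` parameters follow the public GDC API documentation as best understood here. The helper name `build_files_query`, the project ID, and the data type are illustrative assumptions and were not discussed in the episode.

```python
import json
from urllib.parse import urlencode, urlparse, parse_qs

# Public GDC API endpoint for file metadata (assumed from the GDC API docs).
GDC_FILES_ENDPOINT = "https://api.gdc.cancer.gov/files"


def build_files_query(project_id, data_type, size=5):
    """Return a GDC /files query URL; hypothetical helper, no request is sent."""
    # Nested filter: both conditions must hold ("and" of two "in" clauses).
    filters = {
        "op": "and",
        "content": [
            {"op": "in",
             "content": {"field": "cases.project.project_id",
                         "value": [project_id]}},
            {"op": "in",
             "content": {"field": "data_type", "value": [data_type]}},
        ],
    }
    params = {
        "filters": json.dumps(filters),           # filters travel as a JSON string
        "fields": "file_id,file_name,data_type",  # columns to return
        "format": "JSON",
        "size": str(size),                        # maximum number of records
    }
    return f"{GDC_FILES_ENDPOINT}?{urlencode(params)}"


# Example values are assumptions chosen for illustration only.
url = build_files_query("TCGA-BRCA", "Masked Somatic Mutation")
print(url)
```

Passing the resulting URL to any HTTP client would be the next step. Because the query is just a URL, the same request can come from a notebook, another data commons, or a desktop script, which is the ecosystem point made above.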

[Lou] Rich, could I add something to that, please, that we neglected to say so far? This is really a tripartite collaboration. It's between the NCI, the government side, with the Center for Cancer Genomics leading but with many parts of the government involved; the Frederick National Lab, which is one of our major contractors; and the University of Chicago. You asked why this works. Well, it works because what we wanted was to have the best system that we could come up with in the United States and, ultimately, the world, and so we put out a call for proposals, and we found the most outstanding proposal and most outstanding team at the University of Chicago. So that is why we created this partnership, just to hit our mark of excellence. And I just wanted to get in that there really has been an important contribution of the Frederick National Lab in making the GDC a success.

[Bob] Yeah, Frederick has played an absolutely essential role, and we each rely on each other to build the best possible system. I think in terms of its usage, it's clear it's the leading system in the world to support cancer genomics research.

[Rich] So Dr. Zenklusen, Dr. Grossman was talking about this as an ecosystem. In building that ecosystem, what would you say are some of the most significant roadblocks you've had to overcome?

[JC] Well, as Lou said, before the GDC the data existed in what was basically Grandma's attic of data. It was a big trunk where all the data was, but you could only find it if you knew what you were looking for. So it was not very user friendly. I remember that when I became the director, we had an AACR plenary session where we spent an hour and a half telling people how to find the files. You should not need an hour-and-a-half tutorial to find the files. And I have to tell you, at the end of that hour and a half, people still didn't know how to find them. And so only the very large groups could do it. That's what I call the Country Club model. I don't like the Country Club model. I like the democratization of data, and that's the main thing that the GDC has done. But I think the biggest hurdle at the beginning was to find all the data and transport it from where it was to the GDC. We are talking about a time when transporting this amount of data would break the Internet. We actually broke it three times. We broke nodes that couldn't operate anymore because we were pushing too much in there. Bob, you may correct me, but it took about four to six months to transfer everything, so that was an enormous effort. And then organizing that, putting it together so we could find it, that was a big deal and a big hurdle. One thing that Bob alluded to, this harmonization, tends to get glossed over, because people say, ‘It's OK, they process it.’ Well, let me tell you something that we did when I got to TCGA. We had three groups that were doing the processing and then the mutation calling. And what I asked was, OK, let's take this project, you all call it, and then we see what the overlap is. Well, we did that. I almost died from a heart attack because the overlap was 3%.
OK, so you can imagine if we had released the data to the wild without having all these steps in the middle. Many people would not be able to compare their results. And so that was the other big problem to be solved: how do we call these things? What are the pipelines that are really mature enough to use in order to have a product that is reliable and consistent? Doing that allowed for what we call the PanCAN Atlas analysis, which was published in 2018 in 30-odd papers in the Cell family of publications. When we were doing that, it took us just two years to do that analysis, while other groups were trying to do the same thing and they finished two years after we did. The only reason we did it faster was because all the data looked the same, had been processed the same way, and we could do the analysis and comparison without spending three years reharmonizing the data. This is something that people sometimes don't realize, how important this is. And there is another thing that the GDC has done that, again, doesn't get page-one notice: when the version of the genome changes, you have to change the data. You have to re-call the data with the new version of the genome, and changing from the version of the genome that we started with to the version of the genome that we have now was an immense lift that took really a year. Now, again, we learned how to do it, so next time we do it, it's not going to take forever. But they had to modify everything in order to do that. And what that does, actually, is keep the data fresh. Because what happens many times is that you look at data that was generated 10 years ago, which is still good data, but it's stale. It doesn't work in your analysis because it was done with a version of the genome that is not the version that we have now.
And so all of these things are immense work and take a lot of time, and they are big bottlenecks to the everyday work, but they are absolutely necessary. And I will say the community at large doesn't notice it, because the GDC does it so well that it's unnoticeable, but it's a big amount of work without which the data really would not be as usable as it is.
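To make the overlap figure JC mentions concrete, here is a minimal sketch of one way agreement between mutation callers could be measured. JC does not say how the 3% was computed; treating overlap as the calls shared by every group divided by the calls made by any group is an assumption, and the function name and the toy variants are hypothetical.

```python
def call_overlap(*call_sets):
    """Fraction of calls shared by every caller, out of calls made by any caller.

    Hypothetical metric: intersection over union across all call sets.
    """
    sets = [set(calls) for calls in call_sets]
    union = set().union(*sets)
    if not union:
        return 0.0
    shared = set.intersection(*sets)
    return len(shared) / len(union)


# Toy variants as (chromosome, position, ref, alt); values are made up.
v1 = ("chr17", 7674220, "C", "T")
v2 = ("chr12", 25245350, "C", "A")
v3 = ("chr7", 140753336, "A", "T")
v4 = ("chr3", 179218303, "G", "A")
v5 = ("chr10", 87933147, "C", "T")

group_a = {v1, v2, v3}
group_b = {v1, v4}
group_c = {v1, v2, v5}

overlap = call_overlap(group_a, group_b, group_c)
print(f"{overlap:.0%} of all calls were made by every group")
```

In this toy case only one of five distinct calls is shared by all three groups. Running a single agreed pipeline over all the data, as the GDC does, removes this source of disagreement between data sets.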

[Rich] Melissa, do you wanna have a chance to hop in?

[Melissa] Well, I just wanted to highlight the last thing that JC said, that the GDC does it so well that people don't realize it. You make use of the GDC, and it's easy and it looks seamless, and that is because of all of the hard work and the expertise, I think, that we have working with the GDC.

One thing, and I think you touched on it earlier, Lou, but it would be interesting to hear you, and JC and Bob as well, talk a little bit about the beginning, before the GDC was the GDC. How did all of that come about? I'm sure there are some interesting stories behind how we got to the GDC.

[Lou] Oh, yeah, there were. I guess the one story that I think reflects well on everyone is that I had this idea, and I had it when I was talking with Kenna Shaw, who was then directing the TCGA project, and she was really bemoaning the fact that the data was so difficult to use. I said we should fix this, and I started talking about it to our then director, Harold Varmus, and he immediately resonated with the idea. And then I said, well, but Harold, that means that we'll need $10 million. And he said, oh, OK, yeah. And then I said, but we'll also need $10 million next year. And he really took a step back and thought about it, because that is, even for the National Cancer Institute, a large chunk of change, and it meant that we were making a very significant investment in the GDC. But Harold had a great vision that this is something we should do.

[Bob] I just want to make two quick comments. There's a saying in technology that the best technologies are invisible. If you think about what Lou and JC described, people don't understand the work for the curation, they don't understand the work for the harmonization, they don't understand the work on the QC, because we've made it invisible. And I think there's no reason the community needs to understand that. The genius of the GDC is that the hard parts are invisible so that they can support the acceleration of cancer research. The other thing is, you told the story about trying to get the funding for the GDC. Something important to keep in mind: if the GDC were as it was initially thought of, and I've recently looked at some of the early design pictures that were shared with the community, it wouldn't have that many users. There just were not that many users. What the GDC did, and this doesn't happen very often in technology, is it created a whole new platform that allowed people to make discoveries and work with cancer data in ways that they hadn't been able to before. So it created a whole new community of users, a whole new community and ecosystem of applications, that fundamentally changed the way that you work with cancer data. As JC said, it democratized access, so lots of people could do lots of things with data that they couldn't do before. I think it's easy to forget these two points, especially since oftentimes we are hesitant to make expenditures because we just think of what we can do today versus what you could do with genuinely new systems. And I wish there were more systems that push boundaries like the GDC. But it takes a rare combination. It took NCI, Leidos, and the University of Chicago working quite hard for a number of years to not only bring this out, but to improve it each year.

[Lou] The one other story that I'll tell, which just came to mind: we meet quite often as a team, the NCI, Frederick National Lab, and Chicago, sometimes in person, to really dive deep, and I think the story I'm going to tell is how a little naivete is helpful. I use computers, but I don't know much about how you create computer systems, and so I came up with an idea that seemed simple to me. The analysis of data, which we've said over and over again is very complicated, isn't even done with one computer program. It's done with a series of computer programs that hand off data from one to another. They work in a consecutive fashion, and typically a human would intervene and reformat the data in a way that the next system could use, and it was a cumbersome process. So I said to Bob and team, well, my idea is that the data should come off a DNA sequencer, and then you push a button, and out the other end you get the mutations that are in that data. Isn't that how the GDC works? I sort of thought the GDC already worked that way. And as Bob just said, in our original conception of it, it didn't work that way. Because it didn't work that way, it was slow and cumbersome to get the analysis done in the early days. Then, over the course of one or two years, the team at the University of Chicago did in fact more or less develop a button to be pushed that takes DNA in at one end and gives you the answer, the mutational drivers of cancer, out the other end. So again, the GDC is a bit like a very skilled magician with amazing sleight of hand who does amazing tricks, and you're not quite sure how they did it. But at some point you just want to be amazed, and you don't think too much about how the trick actually worked.
So again, to bring it back, it helps to have some cancer biologists who have a desire to do something but not quite the computer science wherewithal to carry it out, to give some ideas about what the design could hope to be. And so it's been a very good partnership all along.

[JC] Yeah, I must say that I think the genius of the GDC is what Bob was saying about all those parts that must be done, but nobody cares to know how they are done. They're invisible. I would say 98% of the cancer biologists and cancer researchers that I know don't care to know how the computer does it. They just want to ask the question and get the results, and get the results consistently. And the fact that the GDC was able to do that in a seemingly simple way contributes to its success, because people use it and then they go and tell the person who is working at the next bench, hey, have you tried this? This is really easy. You don't need to read the manual. It's intuitive. You just go and say what you're interested in doing, and there you go. It's scientific magic. I always remember the Far Side cartoon where they start with some equations and they get to the result, and in the middle it says some magic happens, and this is exactly what the GDC is doing. It's the middle of the box. If you are interested, all the documentation is there. You can go and look at it. But the majority of cancer researchers don't care how it happened. They just care that it happens, that it happens easily, and that they don't have to have a humongous bioinformatics department in their institution in order to do the analysis. So that's what the GDC is doing. It's being that humongous bioinformatics department for everyone.